Study reproducibility is valuable for validating or refuting results. Providing reproducibility indicators, such as materials, protocols, and raw data, improves a study’s potential for reproduction. Efforts to reproduce noteworthy studies in the biomedical sciences have found an overwhelming majority of them to be irreproducible, raising concern about the integrity of research in other fields, including medical specialties. Here, we analyzed the reproducibility of studies in the field of pulmonology.

Methods

We randomly selected 500 pulmonology articles from an initial PubMed search for data extraction. Two authors examined these articles for reproducibility indicators, including materials, protocols, raw data, analysis scripts, inclusion in systematic reviews, and citations by replication studies, as well as other factors of research transparency, including open accessibility, funding source and competing interest disclosures, and study preregistration.

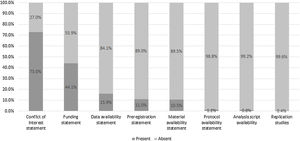

Findings

Few publications included statements regarding the availability of materials (10%), protocols (1%), data (15%), or analysis scripts (<1%). Fewer than 10% indicated preregistration. More than half of the publications analyzed failed to provide a funding statement. Conversely, 63% of the publications were open access and 73% included a conflict of interest statement.

Interpretation

Overall, our study indicates that pulmonology research is currently lacking in efforts to increase replicability. Future studies should provide sufficient information regarding materials, protocols, raw data, and analysis scripts, among other indicators, because clinical decisions depend on replicable or refutable results from the primary literature.

Key messages

What is the key question?

Are practices to improve study replicability and transparency being applied in pulmonology research?

What is the bottom line?

Current research in pulmonology is lacking in efforts to improve study replicability.

Why read on?

Study replicability is a fundamental aspect of the scientific method, and practices that ensure it should be improved for the benefit of research that may eventually inform clinical decisions.

Introduction

Reproducibility, the ability to duplicate a study’s results using the same materials and methods as the original investigator, is central to the scientific method.1 Study reproducibility establishes confidence in the efficacy of therapies, while results that contradict original findings may lead to overturning previous standards. Herrera-Perez et al. recently evaluated 396 medical reversals in which suboptimal clinical practices were overturned when randomized controlled trials yielded results contrary to current practices.2 Given the evolving nature of evidence-based patient care, studies must be conducted in a way that fosters reproducibility and transparency. Further, materials, protocols, analysis scripts, and patient data must be made available to enable verification.

Efforts supporting reproducibility are becoming more widespread owing to the open science movement. In 2013, the Center for Open Science was established to “increase the openness, integrity, and reproducibility of scientific research”.3 The center sponsored two large-scale reproducibility efforts: a series of 100 replication attempts in psychology and a series of 50 landmark cancer biology study replication attempts. In the first, investigators successfully reproduced only 39% of the original study findings.4 In the second, efforts were halted after only 18 replications because of lack of information and materials from authors, insufficient funding, and insufficient time to perform all the experiments.5 The center also created the Open Science Framework, a repository in which authors may deposit study protocols, participant data, analysis scripts, and other materials needed for study reproduction. More recently, the center created the Transparency and Openness Promotion Guidelines, which include eight transparency standards and provide guidance for funders and journals, and initiated the use of badges for journals that adopt reproducible practices.

Current estimates of study reproducibility are alarming. In the biomedical sciences, reproducibility rates may be as low as 25%.6 One survey of 1576 scientists found that 90% of respondents believed science was experiencing a reproducibility crisis; 70% reported not being able to reproduce another investigator’s findings, and more than half reported an inability to reproduce their own findings.7 The picture is even less clear in the clinical sciences. Ioannidis found that of 49 highly cited original research publications, seven were refuted by newer studies, and seven suggested higher efficacy than follow-up results; only 22 were successfully replicated.8 The National Institutes of Health and the National Science Foundation have responded to this crisis by taking measures to ensure that studies funded by tax dollars are more reproducible. However, little is known about the extent to which reproducibility practices are used in clinical research.

In this study, we evaluated reproducible and transparent research practices in the pulmonology literature.11 Our goals were (i) to determine areas of strength and weakness in current use of reproducible and transparent research practices and (ii) to establish a baseline for subsequent investigations of the pulmonology literature.

Methods

This observational study employed a cross-sectional design. We used the methodology of Hardwicke et al.,11 with modifications. In reporting this study, we follow the guidelines for meta-epidemiological methodology research9 and the Preferred Reporting Items for Systematic Reviews and Meta-analyses (PRISMA).10 This study did not satisfy the regulatory definition for human subjects research as specified in the Code of Federal Regulations and therefore was not subject to institutional review board oversight. We have listed our protocol, materials, and data on Open Science Framework (https://osf.io/n4yh5/).

Journal and publication selection

The National Library of Medicine (NLM) catalog was searched by DT on May 29, 2019, using the subject terms tag “Pulmonary Medicine[ST]” to identify pulmonary medicine journals. To meet inclusion criteria, journals had to be published in English and be MEDLINE indexed. We obtained the electronic ISSN (or linking ISSN) for each journal in the NLM catalog meeting inclusion criteria. Using these ISSNs, we formulated a search string and searched PubMed on May 31, 2019, to locate publications published between January 1, 2014, and December 31, 2018. We then randomly selected 500 publications for data extraction using Excel’s random number function (https://osf.io/zxjd9/). We used OpenEpi version 3.0 to conduct a power analysis to estimate sample size. Data availability was the primary outcome due to its importance for study reproducibility.9 The population size of studies published in MEDLINE-indexed journals from which we selected our random sample was 299,255, with a hypothesized frequency of 18.5% for the factor in the population (based on data obtained by Hardwicke et al.11), a confidence limit of 5%, and a design effect of 1. Based on these assumptions, our study would require a sample size of 232. To allow for the attrition of publications that would not meet inclusion criteria, we randomly sampled a total of 500 publications. Previous investigations, upon which this study is based, have included random samples of 250 publications in the social sciences and 150 publications in the biomedical sciences.
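The sample size reported above can be approximated with the standard finite-population formula for estimating a single proportion. The sketch below is illustrative only: it assumes a 95% confidence level and that the formula matches what OpenEpi applies, and the function and parameter names are ours rather than part of any tool used in the study.

```python
import math
from statistics import NormalDist


def sample_size_for_proportion(population, expected_freq, margin,
                               design_effect=1.0, confidence=0.95):
    """Finite-population sample size for estimating a single proportion.

    Assumed formula (not confirmed as the study's exact computation):
        n = DEFF * N * p * (1 - p) / ((d^2 / z^2) * (N - 1) + p * (1 - p))
    """
    # Two-sided critical value of the standard normal (about 1.96 for 95%).
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    variance = expected_freq * (1 - expected_freq)
    numerator = design_effect * population * variance
    denominator = (margin ** 2 / z ** 2) * (population - 1) + variance
    # Round up so the requested precision is met or exceeded.
    return math.ceil(numerator / denominator)


# Inputs taken from the text: N = 299,255; p = 18.5%; d = 5%; design effect = 1.
required = sample_size_for_proportion(population=299_255,
                                      expected_freq=0.185,
                                      margin=0.05)
print(required)
```

With the inputs reported in this section, the sketch returns 232, which agrees with the required sample size stated above.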

Extraction training

Prior to data extraction, two investigators (JN, CS) underwent training to ensure inter-rater reliability. The training included an in-person session to review the study design, protocol, Google form, and location of the extracted data elements in two publications. The investigators were then given three additional publications from which to extract data. Afterward, the pair reconciled differences by discussion. This training session was recorded and deposited online for reference (https://osf.io/tf7nw/). Before extracting data from all 500 publications, the two investigators extracted data from the first 10 and then held a consensus meeting. Data extraction for the remaining 490 publications followed, and a final consensus meeting was held to resolve disagreements. A third author (DT) was available for adjudication, if necessary.

Data extraction

The two investigators extracted data from the 500 publications in a duplicate and blinded fashion. A pilot-tested Google form was adapted from Hardwicke et al.,11 with additions (see Table 1 for a description of the indicators of reproducibility and transparency). This form prompted coders to identify whether a study provided the information needed for it to be reproduced (https://osf.io/3nfa5/). The extracted data varied by study design. For studies without empirical data (e.g., editorials, commentaries [without reanalysis], simulations, news, reviews, and poems), we extracted only the publication characteristics, conflict of interest statement, financial disclosure statement, funding sources, and open access availability. Non-empirical studies are not expected to be reproduced and therefore do not contain the indicators used for this study. Empirical studies included clinical trials, cohort studies, case series, secondary analyses, chart reviews, and cross-sectional studies. We catalogued the most recent year and 5-year impact factor of the publishing journals. Finally, we expanded the funding options to include university, hospital, public, private/industry, or nonprofit. To examine areas of pulmonology research in more depth, we analyzed the journal and subspecialty of each empirical study.
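Duplicate, blinded extraction ends with a comparison of the two coders’ responses so that disagreements can be taken to a consensus meeting. The following is a minimal sketch of that comparison step. The publication identifiers, field names, and responses are hypothetical and do not come from the study’s Google form or data.

```python
def find_disagreements(coder_a, coder_b):
    """Return (publication_id, field, value_a, value_b) for every mismatch.

    Each argument maps a publication ID to a dict of extracted fields.
    A field or publication missing for one coder counts as a mismatch.
    """
    disagreements = []
    for pub_id in sorted(coder_a.keys() | coder_b.keys()):
        form_a = coder_a.get(pub_id, {})
        form_b = coder_b.get(pub_id, {})
        for field in sorted(form_a.keys() | form_b.keys()):
            value_a = form_a.get(field)
            value_b = form_b.get(field)
            if value_a != value_b:
                disagreements.append((pub_id, field, value_a, value_b))
    return disagreements


# Hypothetical responses for two publications (not study data).
coder_a = {
    "pub-001": {"data_statement": "none", "preregistered": "no statement"},
    "pub-002": {"data_statement": "available", "preregistered": "yes"},
}
coder_b = {
    "pub-001": {"data_statement": "none", "preregistered": "no statement"},
    "pub-002": {"data_statement": "none", "preregistered": "yes"},
}

for row in find_disagreements(coder_a, coder_b):
    print(row)
```

Each row returned is one item for the consensus meeting; items the pair cannot settle would go to the third author for adjudication.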

Table 1. Indicators of reproducibility.

| Reproducibility indicator | Number of studies | Role in producing transparent and reproducible science |
|---|---|---|
| **Articles** | | |
| Availability of article (paywall or public access) | All (n = 500) | Widely accessible articles increase transparency |
| **Funding** | | |
| Statement of funding sources | All included studies (n = 492) | Disclosure of possible sources of bias |
| **Conflict of interest** | | |
| Statement of competing interests | All included studies (n = 492) | Disclosure of possible sources of bias |
| **Evidence synthesis** | | |
| Citations in systematic reviews or meta-analyses | Empirical studies excluding systematic reviews and meta-analyses (n = 294) | Evidence of similar studies being conducted |
| **Protocols** | | |
| Availability statement, and if available, what aspects of the study are included | Empirical studies excluding case studies (n = 245) | Availability of methods and analysis needed to replicate study |
| **Materials** | | |
| Availability statement, retrieval method, and accessibility | Empirical studies excluding case studies and systematic reviews/meta-analysis (n = 238) | Defines exact materials needed to reproduce study |
| **Raw data** | | |
| Availability statement, retrieval method, accessibility, comprehensibility, and content | Empirical studies excluding case studies (n = 245) | Provision of data collected in the study to allow for independent verification |
| **Analysis scripts** | | |
| Availability statement, retrieval method, and accessibility | Empirical studies excluding case studies (n = 245) | Provision of scripts used to analyze data |
| **Preregistration** | | |
| Availability statement, retrieval method, accessibility, and content | Empirical studies excluding case studies (n = 245) | Publicly accessible study protocol |
| **Replication study** | | |
| Is the study replicating another study, or has another study replicated the study in question | Empirical studies excluding case studies (n = 245) | Evidence of replicability of the study |

Bold values are used to increase contrast between entries.

We used Open Access Button (http://www.openaccessbutton.org) to identify publications that were publicly available. Both the journal title and DOI were used in the search to reduce the chance of missing an article. If Open Access Button could not locate an article, we searched Google and PubMed to confirm open access status.

Publication citations included in research synthesis and replication

For empirical studies, Web of Science was used to identify whether the publication had been replicated in other studies and had been included in systematic reviews and/or meta-analyses. To accomplish these tasks, two investigators (CS, JN) inspected the titles, abstracts, and introductions of all publications in which the reference study was cited. This process was conducted in a duplicate, blinded fashion.

Data analysis

We used Microsoft Excel to calculate descriptive statistics and 95% confidence intervals (95% CIs). The Wilson score method for binomial proportions was used to create confidence intervals in this study.12
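For readers unfamiliar with the Wilson score method, the sketch below shows the usual closed-form interval for a binomial proportion. It is an illustration under stated assumptions (z = 1.96 for a 95% interval, no continuity correction), not a reconstruction of the authors’ Excel workbook, and the counts in the usage line are illustrative rather than study data.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion, as (lower, upper)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p_hat = successes / n
    denom = 1 + z ** 2 / n
    # The interval is centered on a value shrunk toward 0.5, not on p_hat.
    center = (p_hat + z ** 2 / (2 * n)) / denom
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / n
                               + z ** 2 / (4 * n ** 2)) / denom
    return center - half_width, center + half_width


# Illustrative counts only: 5 successes out of 10 trials.
lower, upper = wilson_interval(successes=5, n=10)
print(f"{lower:.3f} to {upper:.3f}")
```

Unlike the simple normal-approximation interval, the Wilson interval always stays within 0 and 1, which matters for indicators reported in only a handful of studies.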

Role of the funding source

This study was funded through the 2019 Presidential Research Fellowship Mentor – Mentee Program at Oklahoma State University Center for Health Sciences. The funding source had no role in the study design, collection, analysis, interpretation of the data, writing of the manuscript, or decision to submit for publication.

Results

Study selection and article accessibility

Our PubMed search identified 299,255 publications. Limiting our search to articles published from January 1, 2014, to December 31, 2018, yielded 72,579 publications, from which 500 were randomly selected. Of these 500 publications, 312 were open access and 180 were behind a paywall. Eight publications could not be accessed by investigators and were thus excluded, leaving 492 for further analysis (Fig. 1). Characteristics of the included publications can be found in Table 2.
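The selection step can be made repeatable by drawing the sample with a seeded random number generator. The sketch below is a hypothetical Python equivalent of a random draw; the study itself used Excel’s random number function, and the seed value and function name here are arbitrary assumptions. Only the counts are taken from the text.

```python
import random

TOTAL_IN_DATE_RANGE = 72_579  # publications from 2014 through 2018 (from the text)
SAMPLE_SIZE = 500             # publications drawn for data extraction
INACCESSIBLE = 8              # publications that could not be accessed


def draw_sample(total, k, seed):
    """Draw k distinct 1-based record positions without replacement."""
    rng = random.Random(seed)  # a fixed seed makes the draw repeatable
    return sorted(rng.sample(range(1, total + 1), k))


sample = draw_sample(TOTAL_IN_DATE_RANGE, SAMPLE_SIZE, seed=2019)  # arbitrary seed
analyzed = SAMPLE_SIZE - INACCESSIBLE
print(len(sample), analyzed)
```

Recording the seed alongside the search export would let another team regenerate the same list of record positions.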

Table 2. Indicators of reproducibility in pulmonology studies.

| Characteristic | Category | N (%) | 95% CI |
|---|---|---|---|
| Funding (N = 492) | University | 8 (1.6) | 0.5−2.7 |
| | Hospital | 5 (1.0) | 0.1−1.9 |
| | Public | 65 (13.2) | 10.2−16.2 |
| | Private/industry | 33 (6.7) | 4.5−8.9 |
| | Non-profit | 11 (2.2) | 0.9−3.5 |
| | No statement listed | 275 (55.9) | 51.5−60.2 |
| | Multiple sources | 41 (8.3) | 5.9−10.8 |
| | Self-funded | 1 (0.2) | 0−0.6 |
| | No funding received | 53 (10.8) | 8.0−13.5 |
| Conflict of interest statement (N = 492) | Statement, one or more conflicts of interest | 98 (19.9) | 16.4−23.4 |
| | Statement, no conflict of interest | 261 (53.2) | 48.8−57.5 |
| | No conflict of interest statement | 132 (26.9) | 23.0−30.8 |
| | Statement inaccessible | 1 (0.2) | 0−0.6 |
| Data availability (N = 245) | Statement, some data are available | 37 (15.1) | 12.0−18.2 |
| | Statement, data are not available | 2 (0.8) | 0−1.6 |
| | No data availability statement | 206 (84.1) | 80.8−87.3 |
| Material availability (N = 238) | Statement, some materials are available | 24 (10.1) | 7.4−12.7 |
| | Statement, materials are not available | 1 (0.4) | 0−1.0 |
| | No materials availability statement | 213 (89.5) | 86.8−92.2 |
| Protocol availability (N = 245) | Full protocol | 3 (1.2) | 0.3−2.2 |
| | No protocol | 242 (98.8) | 97.8−99.7 |
| Analysis scripts (N = 245) | Statement, some analysis scripts are available | 2 (0.8) | 0−1.6 |
| | Statement, analysis scripts are not available | 0 | 0 |
| | No analysis script availability statement | 243 (99.2) | 98.4−100 |
| Replication studies (N = 245) | Novel study | 244 (99.6) | 99.0−100 |
| | Replication | 1 (0.4) | 0−1.0 |
| Cited by systematic review or meta-analysis (N = 294) | No citations | 259 (88.1) | 85.3−90.9 |
| | A single citation | 20 (6.8) | 4.6−9.0 |
| | Two to five citations | 14 (4.8) | 2.9−6.6 |
| | Greater than five citations | 1 (0.3) | 0−0.8 |
| | Excluded from SR or MA | 1 (0.3) | 0−0.8 |
| Preregistration (N = 245) | Statement present, preregistered | 23 (9.4) | 6.8−11.9 |
| | Statement present, not preregistered | 4 (1.6) | 0.5−2.7 |
| | No preregistration statement | 218 (89.0) | 86.2−91.7 |
| Frequency of reproducibility indicators in selected studies (N = 301) | Number of indicators present in study | | |
| | 0 | 49 (16.3) | NA |
| | 1 | 119 (39.5) | NA |
| | 2−5 | 133 (44.2) | NA |
| | 6−8 | 0 | NA |
| Open access (N = 492) | Found via Open Access Button | 215 (43.7) | 39.4−48.0 |
| | Found article via other means | 97 (19.7) | 16.2−23.2 |
| | Could not access through paywall | 180 (36.6) | 32.4−40.8 |

Fig. 2 depicts an overview of our study results. A total of 238 empirical studies (excluding 56 case studies/case series, six meta-analyses, and one systematic review) were evaluated for material availability. The majority of studies offered no statement regarding availability of materials (n = 213; 89.50% [95% CI, 86.81%–92.18%]). Twenty-four studies (10.08% [7.44%–12.72%]) had a clear statement regarding the availability of study materials. One study (0.42% [0%–0.99%]) included an explicit statement that the materials were not publicly available. Eighteen of the 24 materials files were accessible; the remaining six led to either a broken URL or a paywalled request form.

A total of 245 empirical studies (excluding 56 case studies/case series) were assessed for availability of protocols, raw data, and analysis scripts. Three studies provided access to a protocol (1.22% [0.26%–2.19%]). Data availability statements were more common, with 37 studies (15.10% [11.96%–18.24%]) including a statement that at least partial data were available. Analysis scripts were found in two studies (0.82% [0.03%–1.61%]). More information on these metrics is presented in Supplemental Tables 1 and 2.

Study preregistration

A total of 245 empirical studies (excluding 56 case studies/case series) were searched for a statement regarding study preregistration. Few studies included statements: 23 (9.39% [6.83%–11.94%]) declared preregistration, while four (1.63% [0.52%–2.74%]) explicitly disclosed that they were not preregistered. More information on preregistration is presented in Supplemental Table 1.

Study replication and citation analysis

Of 245 empirical studies analyzed, only one (0.41% [0%–0.97%]) reported replication of the methods of a previously published study. No studies were cited by a replication study. A total of 294 of the 301 empirical studies (excluding six meta-analyses and one systematic review) were evaluated to determine whether any had been included in a systematic review or meta-analysis. Twenty studies (6.80% [4.60%–9.01%]) were cited once in a systematic review or meta-analysis, 14 studies (4.76% [2.90%–6.63%]) were cited in two to five systematic reviews or meta-analyses, and one study (0.34% [0%–0.85%]) was cited in more than five systematic reviews or meta-analyses. One study (0.34% [0%–0.85%]) was explicitly excluded from a systematic review.
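The three denominators used in the Results (245, 238, and 294) all follow from the 301 empirical studies and the exclusions described above. The short sketch below works through that arithmetic and recomputes a few of the reported percentages. The variable and function names are ours, and only counts stated in the text are used.

```python
EMPIRICAL_STUDIES = 301
CASE_STUDIES_OR_SERIES = 56
META_ANALYSES = 6
SYSTEMATIC_REVIEWS = 1

evidence_syntheses = META_ANALYSES + SYSTEMATIC_REVIEWS

denominators = {
    # Protocols, raw data, analysis scripts, preregistration, replication.
    "excluding case studies": EMPIRICAL_STUDIES - CASE_STUDIES_OR_SERIES,
    # Materials: case studies and evidence syntheses are both excluded.
    "materials": EMPIRICAL_STUDIES - CASE_STUDIES_OR_SERIES - evidence_syntheses,
    # Citation by a systematic review or meta-analysis.
    "citation analysis": EMPIRICAL_STUDIES - evidence_syntheses,
}


def percent(count, total):
    """Percentage rounded to two decimals, as reported in the text."""
    return round(100 * count / total, 2)


for label, n in denominators.items():
    print(f"{label}: n = {n}")
print(percent(37, denominators["excluding case studies"]))  # data statements
print(percent(24, denominators["materials"]))               # materials statements
```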

Conflict of interest and funding disclosures

All 492 publications were assessed for their inclusion of a conflict of interest statement and/or a funding statement. A majority (n = 359; 73.08%) included a conflict of interest statement, with 261 declaring no competing interests (53.16% [48.78%–57.53%]). More than half of the publications failed to provide a funding statement (n = 275; 55.89%; Table 2). In publications with a funding statement, public funding was the most common source (n = 65; 13.21%).

Journal and subspecialty characteristics

Table 3 lists the number of studies sampled from each journal along with the mean number of reproducibility indicators per study. All 58 journals had at least one publication with empirical data; The Annals of Thoracic Surgery had the most, with 33. Table 4 lists the pulmonology subspecialties with their number of publications and mean number of reproducibility indicators. The most frequent subspecialties were interventional pulmonology (102 publications), obstructive lung disease (66), and critical care medicine (57). Publications on pulmonary hypertension averaged the most reproducibility indicators, with a mean of 2.

Table 4. Number of studies per pulmonology subspecialty and mean number of reproducibility indicators.

| Pulmonology subspecialty | Number of studies | Mean number of reproducibility indicators |
|---|---|---|
| Interventional pulmonology | 102 | 0.98 |
| Tobacco treatment | 3 | 1 |
| Lung transplantation | 8 | 1.13 |
| Sarcoidosis | 4 | 1.25 |
| Neuromuscular disease | 3 | 1.33 |
| Cystic fibrosis | 9 | 1.44 |
| Critical care medicine | 57 | 1.47 |
| Lung cancer | 27 | 1.52 |
| Obstructive lung disease | 66 | 1.76 |
| Interstitial lung disease | 9 | 1.78 |
| Sleep medicine | 9 | 1.89 |
| Pulmonary hypertension | 4 | 2 |

Table 3. Number of studies per journal and mean number of reproducibility indicators.

| Journal title | Number of studies | Mean number of reproducibility indicators |
|---|---|---|
| Journal of cardiothoracic and vascular anesthesia | 12 | 0.25 |
| The annals of thoracic surgery | 33 | 0.33 |
| Respiration; international review of thoracic diseases | 3 | 0.67 |
| Respirology | 3 | 0.67 |
| The thoracic and cardiovascular surgeon | 7 | 0.86 |
| Respiratory investigation | 1 | 1 |
| Annals of thoracic and cardiovascular surgery | 4 | 1 |
| Canadian respiratory journal | 2 | 1 |
| Seminars in thoracic and cardiovascular surgery | 1 | 1 |
| Jornal Brasileiro de pneumologia | 2 | 1 |
| Journal of thoracic imaging | 1 | 1 |
| Respiratory care | 3 | 1 |
| Current allergy and asthma reports | 1 | 1 |
| European respiratory review | 1 | 1 |
| General thoracic and cardiovascular surgery | 2 | 1 |
| Journal of bronchology & interventional pulmonology | 6 | 1.17 |
| Annals of the American thoracic society | 12 | 1.7 |
| Respiratory physiology & neurobiology | 4 | 1.25 |
| Thoracic cancer | 4 | 1.25 |
| The journal of heart and lung transplantation | 4 | 1.25 |
| Heart, lung & circulation | 7 | 1.29 |
| The European respiratory journal | 7 | 1.29 |
| Lung | 3 | 1.33 |
| Pulmonary pharmacology & therapeutics | 3 | 1.33 |
| Journal of cystic fibrosis | 6 | 1.33 |
| American journal of physiology. lung cellular and molecular physiology | 3 | 1.33 |
| Interactive cardiovascular and thoracic surgery | 8 | 1.38 |
| The journal of thoracic and cardiovascular surgery | 13 | 1.385 |
| The clinical respiratory journal | 5 | 1.4 |
| Tuberculosis (Edinburgh, Scotland) | 5 | 1.4 |
| Journal of breath research | 5 | 1.4 |
| Experimental lung research | 2 | 1.5 |
| The journal of asthma | 4 | 1.5 |
| Clinical lung cancer | 2 | 1.5 |
| The international journal of tuberculosis and lung disease | 5 | 1.6 |
| Respiratory medicine | 5 | 1.6 |
| Chest | 5 | 1.6 |
| European journal of cardio-thoracic surgery | 9 | 1.67 |
| Thorax | 7 | 1.71 |
| Journal of cardiothoracic surgery | 4 | 1.75 |
| Respiratory research | 9 | 1.78 |
| Annals of allergy, asthma & immunology | 10 | 1.8 |
| American journal of respiratory and critical care medicine | 5 | 1.8 |
| Asian cardiovascular & thoracic annals | 8 | 2 |
| Journal of cardiopulmonary rehabilitation and prevention | 1 | 2 |
| Heart & lung : the journal of critical care | 1 | 2 |
| Sleep & breathing | 7 | 2 |
| Allergy and asthma proceedings | 4 | 2 |
| Chronic respiratory disease | 2 | 2 |
| International journal of chronic obstructive pulmonary disease | 10 | 2 |
| Multimedia manual of cardiothoracic surgery | 1 | 2 |
| The Lancet. Respiratory medicine | 4 | 2 |
| Pediatric pulmonology | 8 | 2.13 |
| Journal of aerosol medicine and pulmonary drug delivery | 5 | 2.2 |
| BMC pulmonary medicine | 4 | 2.25 |
| Journal of thoracic oncology | 5 | 2.8 |
| NPJ primary care respiratory medicine | 1 | 3 |
| COPD | 2 | 3.5 |

In this cross-sectional review of pulmonology publications, a substantial majority failed to provide materials, participant data, or analysis scripts. Many were not preregistered and few had an available protocol. Reproducibility has been viewed as an increasingly troublesome area of study methodology.13 Recent attempts at reproducing preclinical14,15 and clinical studies have found that only 25%–61% of studies may be successfully reproduced.6,16 Within the field of critical care medicine, a recent publication found that only 42% of randomized trials contained a reproduction attempt with half of those reporting inconsistent results compared to the original.17 Many factors contribute to limited study reproducibility, including poor (or limited) reporting of study methodology, prevalence of exaggerated statements, and limited training on experimental design in higher education.18 In an effort to limit printed pages and increase readability, journals may request that authors abridge methods sections.19 Here, we briefly comment on selected indicators to present a balanced view of the perspectives of those in favor of reproducibility and transparency and those who resist enacting such changes.

First, data sharing allows for the independent verification of study results or reuse of that data for subsequent analyses. Two sets of principles exist. The first, known as FAIR, outlines mechanisms for findability, accessibility, interoperability, and reusability. FAIR principles are intended to apply to study data as well as the algorithms, tools, and workflows that led to the data. FAIR advocates that data be accessible to the right people, in the right way, and at the right time.20 A second set of principles relates to making data available to the public to access, use, and share without licenses, copyrights, or patents.21 While we advocate for data sharing, we recognize that it is a complex issue. Making data available for others’ use requires skills, and the process, which includes the construction of data dictionaries and data curation, is time consuming. Furthermore, concerns exist that unrestricted access to data could facilitate a culture of “research parasites,” a term coined by Drazen and Longo22 that suggests that secondary researchers might exploit primary research data for publication. Drazen and Longo also cautioned that secondary authors might not understand the decisions made when defining parameters of the original investigations. Finally, the sensitive nature of some data causes concern among researchers.

Second, preregistering a study requires authors to provide their preliminary protocol, materials, and analysis plan on a publicly available website. The most common websites used by authors are ClinicalTrials.gov and the International Clinical Trials Registry Platform hosted by the World Health Organization. These registries improve the reliability and transparency of published findings by preventing selective reporting of results, preventing unnecessary duplication of studies, and providing relevant material to patients who may enroll in such trials.23 The Food and Drug Administration (FDA) Amendments Act and the International Committee of Medical Journal Editors (ICMJE) have both required registration of clinical trials prior to initiation of a study.24,25 Selective reporting bias, which includes demoting primary endpoints, omitting endpoints, or upgrading secondary endpoints in favor of statistical significance, may be especially pervasive and problematic. Numerous studies across several fields of medicine have evaluated the extent and magnitude of the problem.26–28 Selective reporting bias and manipulation of endpoints may compromise clinical decision making. Another issue, p-hacking, occurs when researchers repeatedly analyze study data until they achieve statistically significant results. Preregistered protocols and statistical analysis plans can be used to fact-check published papers to ensure that any alterations made in the interim were made for good reason.
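The concern about repeated analysis can be illustrated with a simple calculation. The sketch below makes a strong simplifying assumption, namely that each analysis is an independent test at the same significance threshold with no true effect present; real reanalyses of one dataset are correlated, so the numbers are indicative only. The function name is ours.

```python
def chance_of_false_positive(num_analyses, alpha=0.05):
    """Probability of at least one p < alpha across independent null analyses."""
    if num_analyses < 0:
        raise ValueError("num_analyses must be non-negative")
    # Each analysis avoids a false positive with probability (1 - alpha).
    return 1 - (1 - alpha) ** num_analyses


for k in (1, 5, 10, 20):
    print(k, round(chance_of_false_positive(k), 3))
```

Under these assumptions, ten unplanned analyses already carry roughly a 40% chance of at least one nominally significant result, which is why a preregistered analysis plan is a useful check.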

Third, transparency related to study funding and financial conflicts of interest should be emphasized. In a previous study, we found that one-third of the authors of pivotal oncology trials underlying FDA drug approvals failed to adequately disclose personal payments from the drug sponsor.29 Recent news accounts of a prominent breast cancer researcher who failed to disclose financial relationships with pharmaceutical companies in dozens of publications have heightened awareness of the pervasiveness of this issue.30 The ICMJE considers willful nondisclosure of financial interests to be a form of research misconduct.31 It is critical that the public be able to adequately evaluate financial relationships of the authors of the published studies in order to evaluate the likelihood of biased results and conclusions.

Several changes are needed to establish a culture of reproducibility and transparency. First, increased awareness of and training about these issues are needed. The National Institutes of Health has funded researchers to produce training and materials, which are available on the Rigor and Reproducibility Initiative website,32 but more remains to be done. Strong mentorship is necessary to encourage trainees to adopt and incorporate reproducible research practices. Research on mentorship programs has found that trainees who have mentors report greater satisfaction with time allocation at work and increased academic self-efficacy compared with trainees without a mentor.33 Conversely, poor mentorship can reinforce poor research practices among junior researchers, such as altering data to produce positive results or changing how results are reported.34 Other research stakeholders must be involved as well. Although many journals recommend the use of reporting guidelines for various study designs, such as CONSORT and PRISMA, evidence suggests that these guidelines are not followed by authors or enforced by journals.35 When journals enforce adherence to reporting guidelines, the completeness of reporting is improved.36 Detractors of reporting guidelines are concerned that certain checklists (CONSORT, STROBE, STARD) will be used to judge research quality rather than improve writing clarity, that editors and peer reviewers will fail to enforce these guidelines, and that insufficient research exists to evaluate the outcomes from applying these guidelines.37 In our sample, COPD, NPJ Primary Care Respiratory Medicine, and Journal of Thoracic Oncology were the three journals whose publications contained the most reproducibility indicators on average. These journals explicitly instruct authors to provide materials and protocols so that independent researchers may recreate the study, or raw data so that calculations can be confirmed.38–40 Although reproducibility may be an emerging topic, these recommendations appear to be encouraging authors to report their research more thoroughly and completely.

Our study has both strengths and limitations. We randomly sampled publications of various types from a large number of pulmonology journals to generalize our findings across the specialty. Our study design also used rigorous training sessions and a standardized protocol to increase the reliability of our results. In particular, our data extraction process, which involved blinded and duplicate extraction by two investigators, is considered the gold standard in systematic review methodology and is recommended by the Cochrane Collaboration.41 We have made all study materials available for public review to enhance the reproducibility of this study. Regarding limitations, our inclusion criteria for journals (i.e., published in English and MEDLINE indexed) potentially removed journals with more lax recommendations regarding indicators of reproducibility and transparency. Furthermore, although we obtained a random sample of publications for analysis, our sample may not have been representative of all pulmonology publications. Our results should be interpreted in light of these strengths and limitations.

In conclusion, our study of the pulmonology literature found that reproducible and transparent research practices are not being incorporated into research. Sharing of study artifacts, in particular, needs improvement. The pulmonology research community should seek to establish norms of reproducible and transparent research practices.

Author contributions

DJT, MV: Substantial contributions to the conception and design of the work. CAS, JN, DJT: Acquisition, analysis, and interpretation of data for the work. CAS, JN, DJT, TEH, JP, KC, MV: Drafted the work and revised it critically for important intellectual content. MV: Final approval of the version submitted for publication. CAS: Accountability for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Conflicts of interest

The authors have no conflicts of interest to declare.

This study was funded through the 2019 Presidential Research Fellowship Mentor – Mentee Program at Oklahoma State University Center for Health Sciences.